-

Notifications

You must be signed in to change notification settings - Fork 652

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Adding more precise scan separation feature #285

base: master

Are you sure you want to change the base?

Adding more precise scan separation feature #285

Conversation

|

@n-patiphon - It looks like CI is currently failing due to some linting errors. If you are using |

|

Also, @n-patiphon, I believe the same thing can be achieved through the |

|

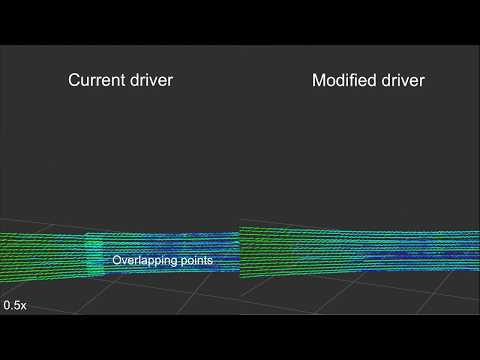

Hi @JWhitleyAStuff , As for the Besides, I went and rechecked the current master. As you suggested, I hard-coded the As you can see, the current master created a vertical dense patch shown in the red circle while this PR implementation did not. |

|

@n-patiphon - You mentioned that this "might be slower" due to more processing. Can you possibly do a simple CPU load test while running the node with and without these modifications and report the results? Also, what else would be required to apply this logic to the other models? |

|

@n-patiphon - There are also conflicts with the current |

|

@n-patiphon This is a very nice contribution. Thank you. Do you have any update about the CPU usage and impact on measurements timing? |

|

Hi, I just looked into the code. I would suggest to just add another container which filters by using the phi angle (with respect to the spherical coordinate system). The container could buffer the extra points and add them - if necessary - in the setup method to the next cloud. I think this should be a much more elegant way and it should not consume more processing time / power. Or, am I missing something? Cheers |

|

@spuetz or @YoshuaNava Would either of you be interested in continuing the implementation of this feature? I'm not sure that MapIV is still a thing (my contact at TierIV says he hasn't heard anything about them for quite some time). |

|

Should be easy, I could do that in a couple of weeks. But I think I'll restart with the approach I suggested above. |

Once you have a new PR ready, I'll close this one. |

|

This modification is great, with this modification i get a predictable motion distortion. With original implementation i get an unpredictable motion distortion, which is resulted from the changing of beginning time of scan. I hope this modification could soon be available by VLP-32C. |

|

@n-patiphon @spuetz Pings all around. |

Hi all.

I'm working on some mapping projects at MapIV, Inc. (TierIV's subsidiary company). During the projects, I found a way to improve the current Velodyne ROS driver, so my team and I think it might be worth sharing here. If I'm missing something or I got something wrong, I would be more than happy to hear from you all :)

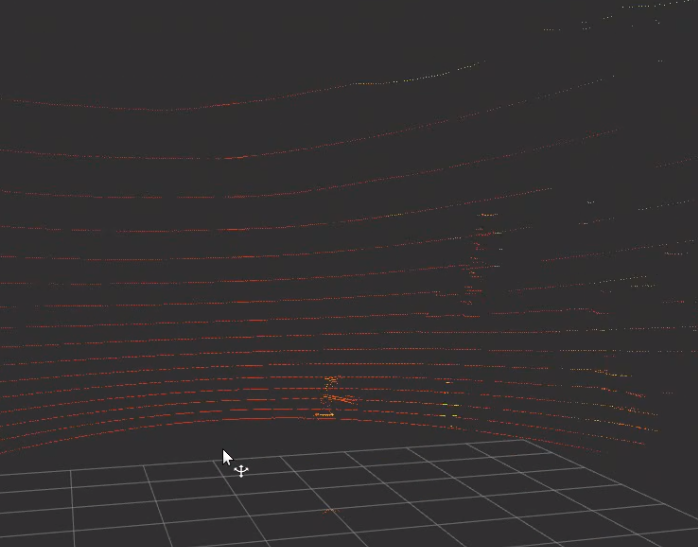

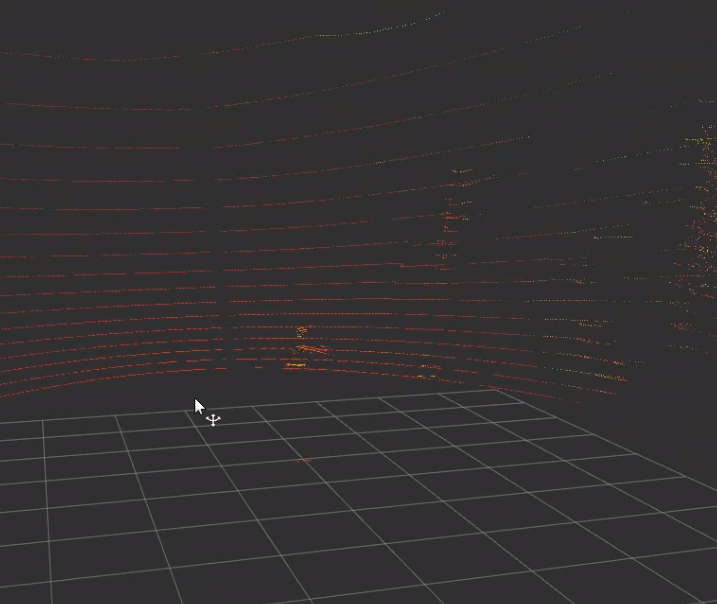

It seems to me that the current implementation only allows creating one full scan from a set of complete packets, say 76 packets/scan or 77 packets/scan. But sometimes, one full scan is actually equivalent to some complete packets and a fraction of it, for example, 76.3 packets/scan. In this case, rounding the number of packets/scan up or down will result in redundant points or missing points in a scan, respectively (see the figures below).

Redundant points

Missing points

So, what I did was basically modifying the driver to separate each scan more precisely. The separation mainly relies on azimuth data each point was taken (estimated using the azimuth of the packet and the firing timing). If the azimuth differences of three consecutive points have different signs, it is assumed that the last point belongs to a new scan. Therefore, it is stored in a residual point cloud waiting to be merged to the next scan. The code for this can be found here.

Also, it is important to ensure that the number of packets in velodyne_msgs::VelodyneScan is more than the final exact packets/scan. For example, if the final number of packets/scan is 76.3, velodyne_msgs::VelodyneScan needs to contain 77 packets in total. The code for this part can be found here.

Lastly, I also use the estimated timestamp of the last point in a scan as the scan timestamp instead of using the last packet timestamp as in the current implementation or the first packet as mentioned here.

Video comparing current driver and the modified driver

Limitations

Related discussions