-

Notifications

You must be signed in to change notification settings - Fork 1

Home

This wiki contains additional information and links for the Mnemosyne project.

- What Is Mnemosyne?

- What Is Mnemosyne For?

- What Problem Does Mnemosyne Solve?

- What Design Principles Underlie Mnemosyne?

- How Does Mnemosyne Accomplish Its Goals?

Mnemosyne is an open-source prototype CAD tool that performs various technology-aware optimizations to create efficient local memory micro-architectures.

The name comes from the Greek mythology: Mnemosyne is the Greek godness of memory. She represented the rote memorization required, before the introduction of writing, to preserve the stories of history and sagas of myth.

Mnemosyne generates a variety of memory elements (e.g., accelerator local memories, multi-port caches, and memory IPs) that can be easily integrated into different commercial synthesis flows for heterogeneous architecture design. More specifically, we target loosely-coupled accelerators and other hardware components that are organized as shown in the following figure.

Each accelerator is composed of two parts: the accelerator logic and the private local memory. The Accelerator Logic is composed of multiple concurrent hardware blocks to manage the data transfers (e.g. Input and Output) and perform the actual computation (e.g. Computation). The Private Local Memory (PLM) locally stores the data structures used by the accelerator to perform the computation so that they can be accessed with fixed latency by the accelerator logic. For doing this, it is organized in multiple banks implemented with area-efficient memory Intellectual Property (IP) blocks to offer the possibility of multiple concurrent accesses through different PLM ports. Each PLM port is connected to a process interface in the accelerator logic, which generates the corresponding memory request.

Modern computing systems feature an increasing number of heterogeneous components, including multiple general-purpose processors and special-purpose accelerators. Local memories are critical for the performance of these components and are often responsible for a large fraction of their area occupation and power dissipation.

Mnemosyne aims at generating an optimized memory subsystem for one or more accelerators by tailoring each of these memories to the specific characteristics of the particular component that is accessing the data it stores. The user provides information on the data structures to be stored in the PLMs, along with additional information on the number of memory interfaces for each accelerator and the compatibilities between the data structures. This information is used to share the memory IPs across accelerators whenever it is possible.

Mnemosyne efficiently reuses the physical banks for storing different types of data. Our approach is motivated by the following observations. First, when a data structure is not used, the associated PLM does not contain any useful data; the corresponding memory IPs can be reused for storing another data structure, thus reducing the total size of the memory subsystem. Second, in some technologies, the area of a single memory IP is smaller than the aggregated area of smaller IPs. For example, in an industrial 32nm CMOS technology, we experimented that a 1,024x32 SRAM is almost 40% smaller than the area of two 512x32 SRAMs, due to the replicated logic for address decoding. In these cases, it is possible to store two data structures in the same memory IP provided that there are not conflicts on the memory interfaces, i.e. the data structures are never accessed at the same time with the same memory operation. Next, we formalize these situations.

To understand when two data structures can share the same memory IPs, we recall the definition of data structure lifetime.

Definition. The lifetime of a data structure b is the interval time between the first memory-write and the last memory-read operations to the data structure.

Having two data structures with no overlapping lifetimes means that while operating on one data structure the other remains unused. Hence, we can use the same memory IPs to store both of them. On the other hand, even when two data structures have overlapping lifetimes, it is still possible to share memory interfaces to potentially reduce the accelerator area.

Definition. Two data structures bi and bj are address-space compatible when their lifetimes are not overlapping for the entire execution of the accelerator. They are memory-interface compatible when it is possible to define a total temporal ordering of the memory operations so that two read (resp. write) accesses to bi and bj never happen at the same time.

When two data structures are memory-interface compatible, memory-read and memory-write operations are never executed at the same time on the same data structure.

To correctly determine the number and capacity of the memory IPs for a data structure, we must analyze the data-structure access patterns and determine how to allocate the data.

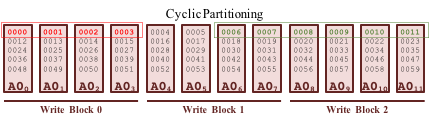

If the read patterns can be statically analyzed and are deterministic, it is possible to distribute the data structure across many blocks. This technique is called cyclic partitioning and assigns consecutive values of the data structure to different blocks.

Otherwise, it is necessary to create identical copies of the data (data duplication). This way, each memory-read interface is assigned to a different block of data and this guarantees to access the data without conflicts. The corresponding memory-write operations must create consistent copies of the data in each bank.

The compatibility information provided by the designer is combined into a Memory Compatibility Graph (MCG), which captures the sharing opportunities among the data structures.

Definition. The Memory Compatibility Graph is a graph MCG=(B,E) where each node b represents a data structure to be stored in the entire memory subsystem; an edge e connects two nodes when the corresponding data structures can be assigned to the same physical memory IPs. Each edge e is also annotated with the corresponding type of compatibility (e.g. address-space or memory-interface).

A MCG with no compatibility edges corresponds to implementing each data structure in a dedicated PLM element. Increasing the number of edges into the MCG corresponds to increasing the number of compatible data structures. This can potentially increase the number of banks that can be reused across different data structures. An accurate compatibility graph is the key to optimize the memory subsystem of the accelerators. In most cases, the designer has to analyze the application's behavior or modify the interconnection topology of the accelerator to increase sharing possibilities.

More details on how to specify the compatibility information in Mnemosyne can be found here.

To assist the system-level optimization of the memory subsystem for K accelerators, Mnemosyne implements the methodology shown in Fig.~\ref{fig:methodology}. Our methodology takes as input the SystemC descriptions of the accelerators (Accelerator Design1...k) and the information about compatibilities among their data structures (Compatibility Information).

We first use a commercial HLS tool to perform design space exploration and generate many alternative micro-architectures of each accelerator logic in order to optimize the performance (HLS). Each implementation is characterized by a set of data structures to be stored in the PLM and the corresponding requirements in terms of memory interfaces (Memory Requirements1...k). We combine the information on all data structures (Memory Requirements1...k), the information on compatibilities (Compatibility Information), and the characteristics of the memory IPs in the Memory Library to determine an optimized architecture for each PLM. After selecting an implementation for each component, we determine the combined requirements in terms of memory interfaces to access each data structure in order to guarantee performance and functional correctness (Technology-unaware Transformations1...k). First, we apply transformations for each accelerator (Local Technology-aware Transformations1...k). Then, we consider all accelerators at the same time and identify when the memory IPs can be reused across different data structures to minimize the cost of the entire memory subsystem (Global Technology-aware Transformations). As output, we produce the RTL description of the memory subsystem (Generation of RTL Architecture) that can be directly integrated with the RTL descriptions of the accelerator logic generated by the HLS tool.

A brief tutorial on Mnemosyne is available at Tutorial.