GroqCall.ai

GroqCall is a proxy server that adds function-calling support to Groq's lightning-fast Language Processing Unit (LPU) and to other AI providers. In addition, the upcoming FuncyHub will offer a wide range of built-in, cloud-hosted functions. This makes it easier to build AI assistants without maintaining function schemas in your codebase or executing them through multiple calls.

Check the GitHub repo for more info: https://github.com/unclecode/groqcall

Motivation 🚀

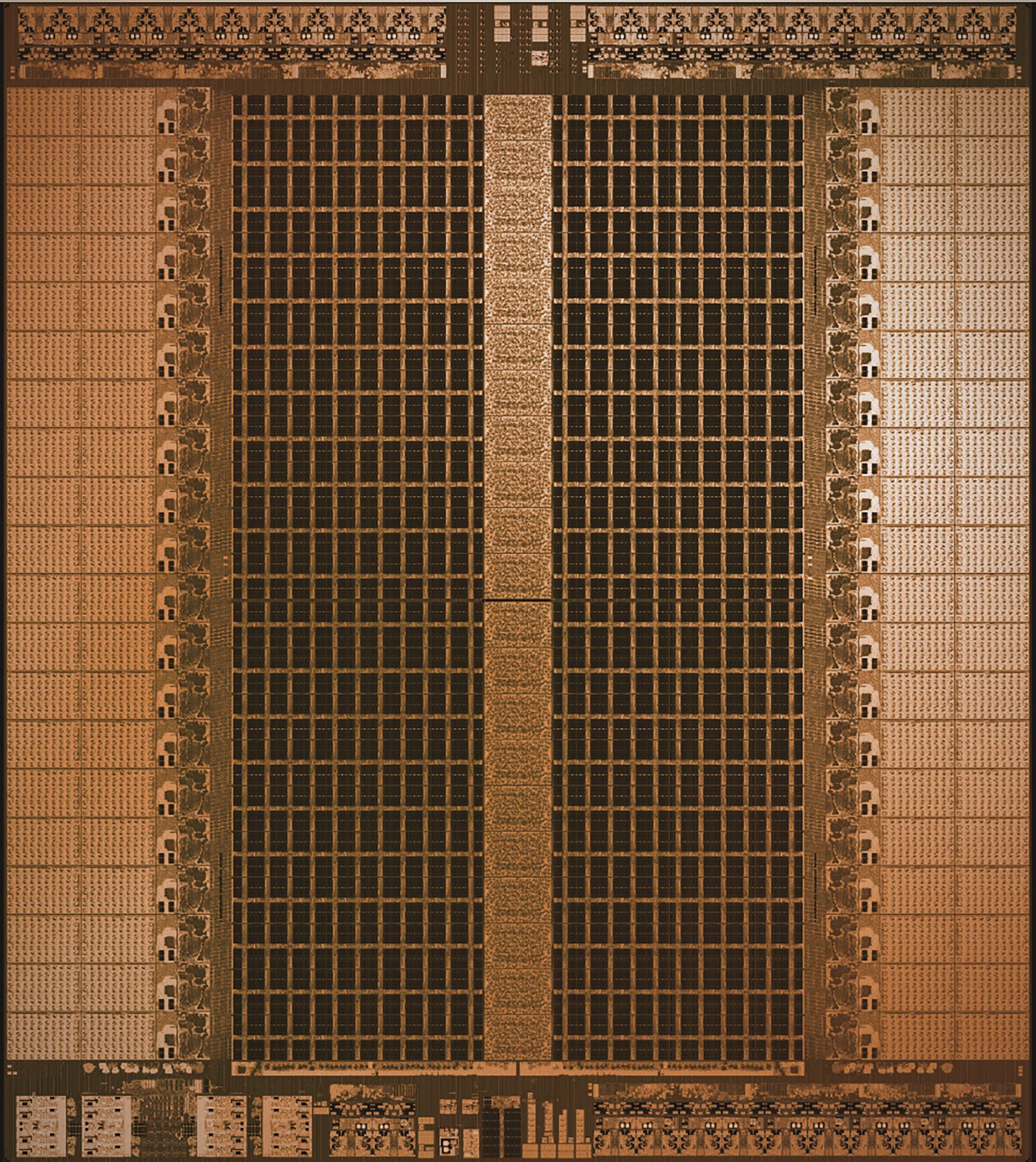

Groq is a startup that designs highly specialized processor chips built specifically for running inference on large language models. They've introduced what they call the Language Processing Unit (LPU), and the speed is astounding: it can produce 500 to 800 tokens per second or more. I've become a big fan of Groq and their community.

I admire what they're doing. It feels as if, after discovering electricity, the next challenge is moving it around quickly and efficiently; Groq is doing just that for artificial intelligence, making it easily accessible everywhere. They've opened up their API in the cloud, but as of now it lacks function-calling capability.

Unable to wait for this feature, I built a proxy that enables function calls through the OpenAI interface, so it can be called from any library that supports that interface. This engineering workaround has proven immensely useful across various projects at my company. The GitHub repository linked above is where you can explore and play around with it, and I've included some examples in this Colab notebook for you to check out.
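Because the proxy speaks the OpenAI chat-completions interface, any client that can send an OpenAI-style request with a `tools` array should, in principle, work against it. The minimal sketch below builds such a request using only the Python standard library, without sending it. The endpoint URL (`PROXY_BASE_URL`), the model name `mixtral-8x7b-32768`, and the `get_weather` function are assumptions for illustration only. Check the repository for the actual proxy URL and the models it supports.

```python
import json
import urllib.request

# Hypothetical placeholder: replace with the proxy endpoint documented in the
# GroqCall repository (this URL is an assumption, not the project's real one).
PROXY_BASE_URL = "http://localhost:8000/proxy/groq/v1"


def build_tool_call_request(api_key: str, model: str, user_message: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request with one tool."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        # OpenAI "tools" format: each tool describes, via JSON Schema,
        # a function the model may ask the caller to run.
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "get_weather",  # hypothetical example function
                    "description": "Get the current weather for a city.",
                    "parameters": {
                        "type": "object",
                        "properties": {"city": {"type": "string"}},
                        "required": ["city"],
                    },
                },
            }
        ],
        "tool_choice": "auto",  # let the model decide whether to call the tool
    }
    return urllib.request.Request(
        url=f"{PROXY_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_tool_call_request(
        "YOUR_GROQ_API_KEY", "mixtral-8x7b-32768", "What's the weather in Paris?"
    )
    print(req.get_method(), req.full_url)
    # Actually sending it (urllib.request.urlopen(req)) requires a running proxy.
```

In practice you would more likely set the `base_url` of an OpenAI SDK client to the proxy's address. The standard-library version here just makes the shape of the request explicit.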
