{t(whatTimeOfDay())}

+
+
+ ドキュメント
+
+

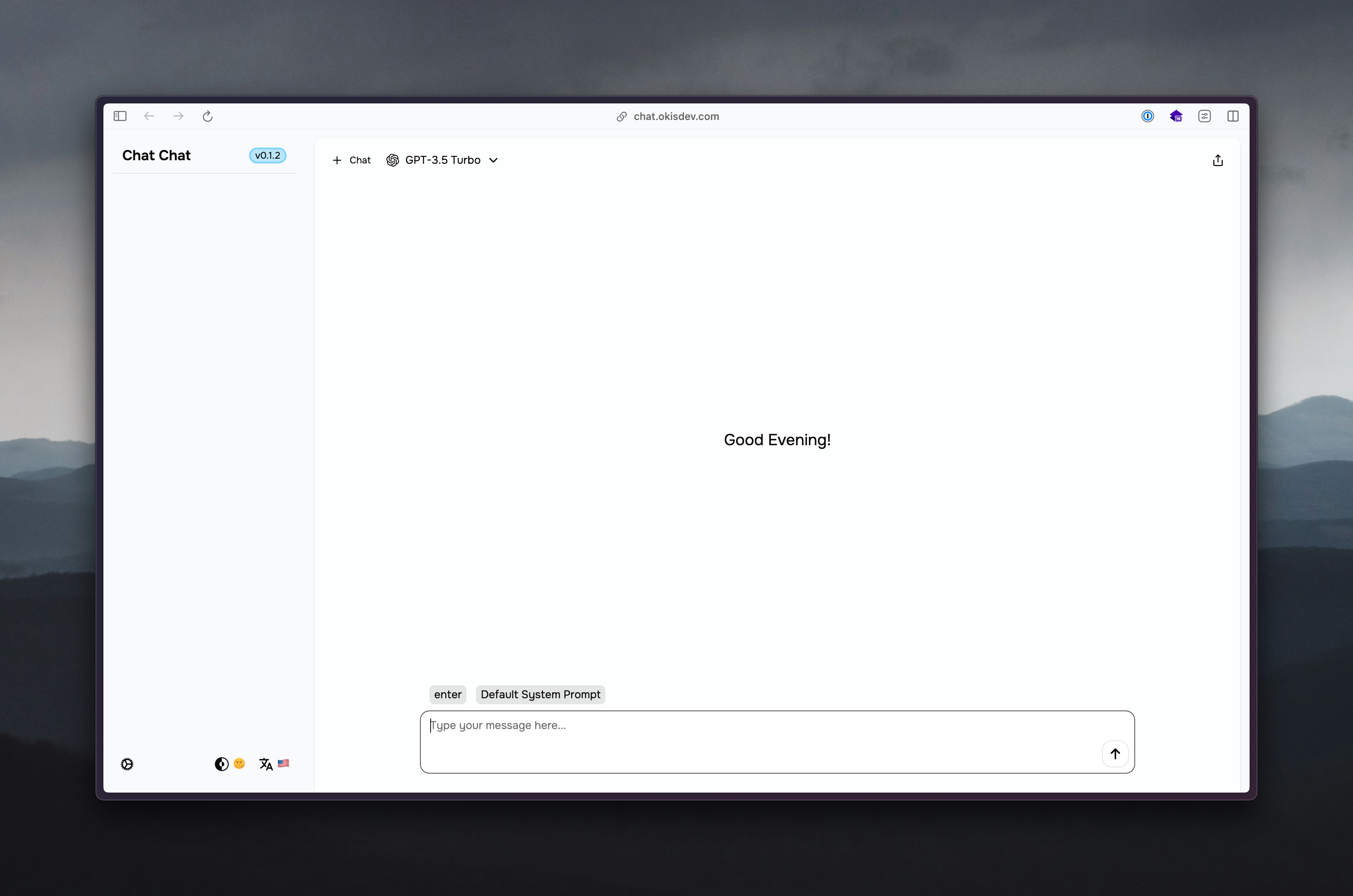

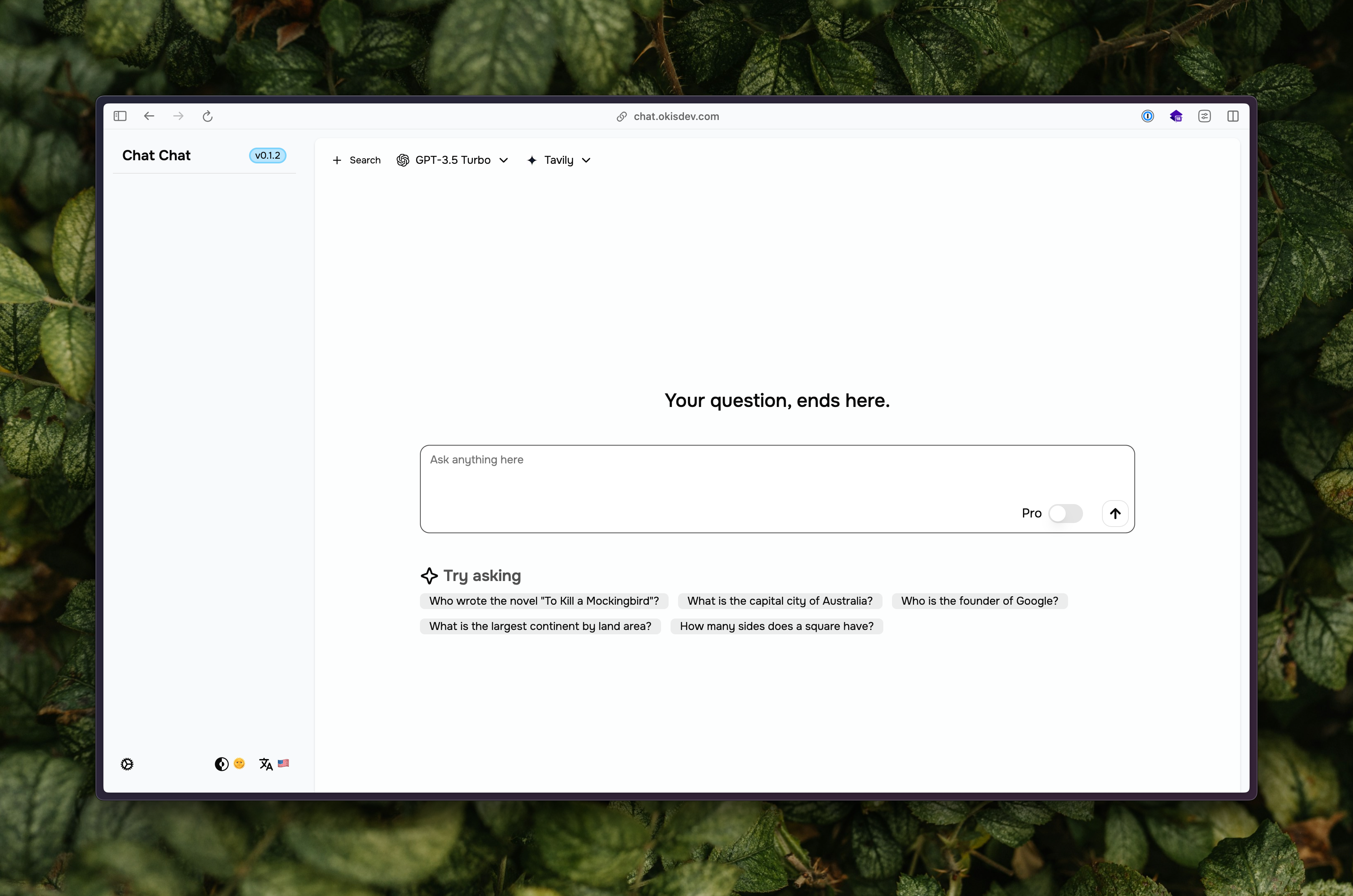

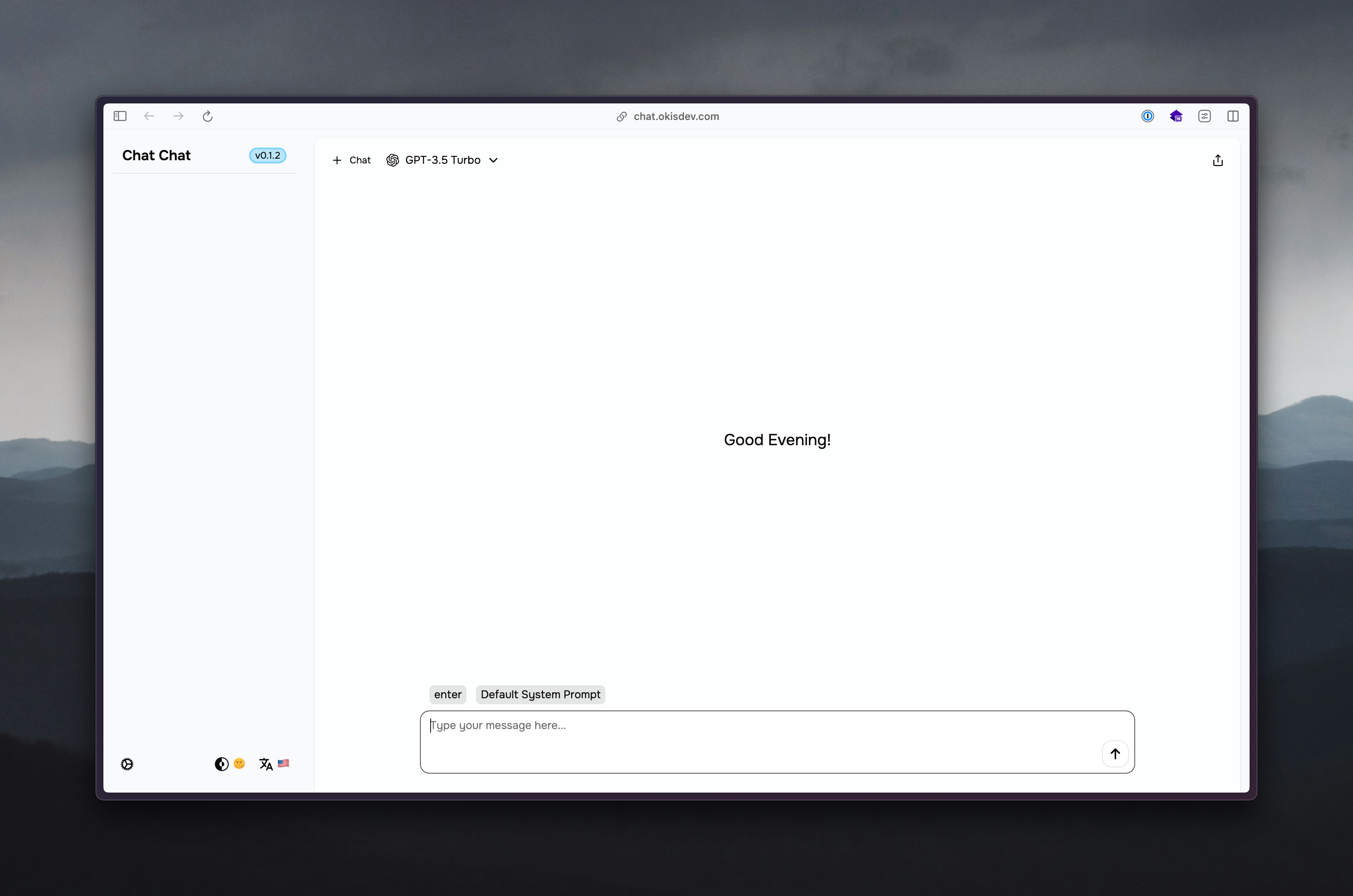

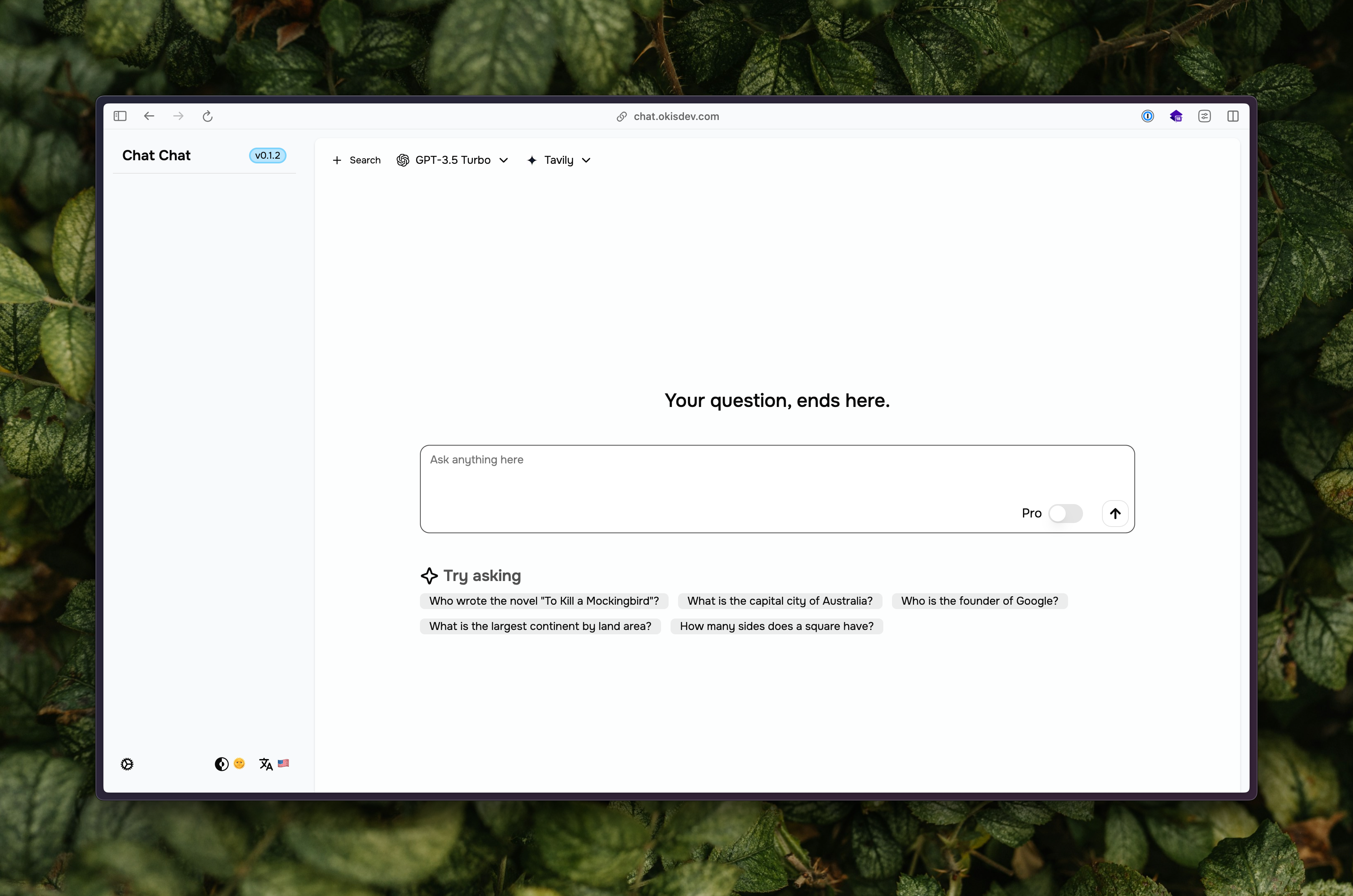

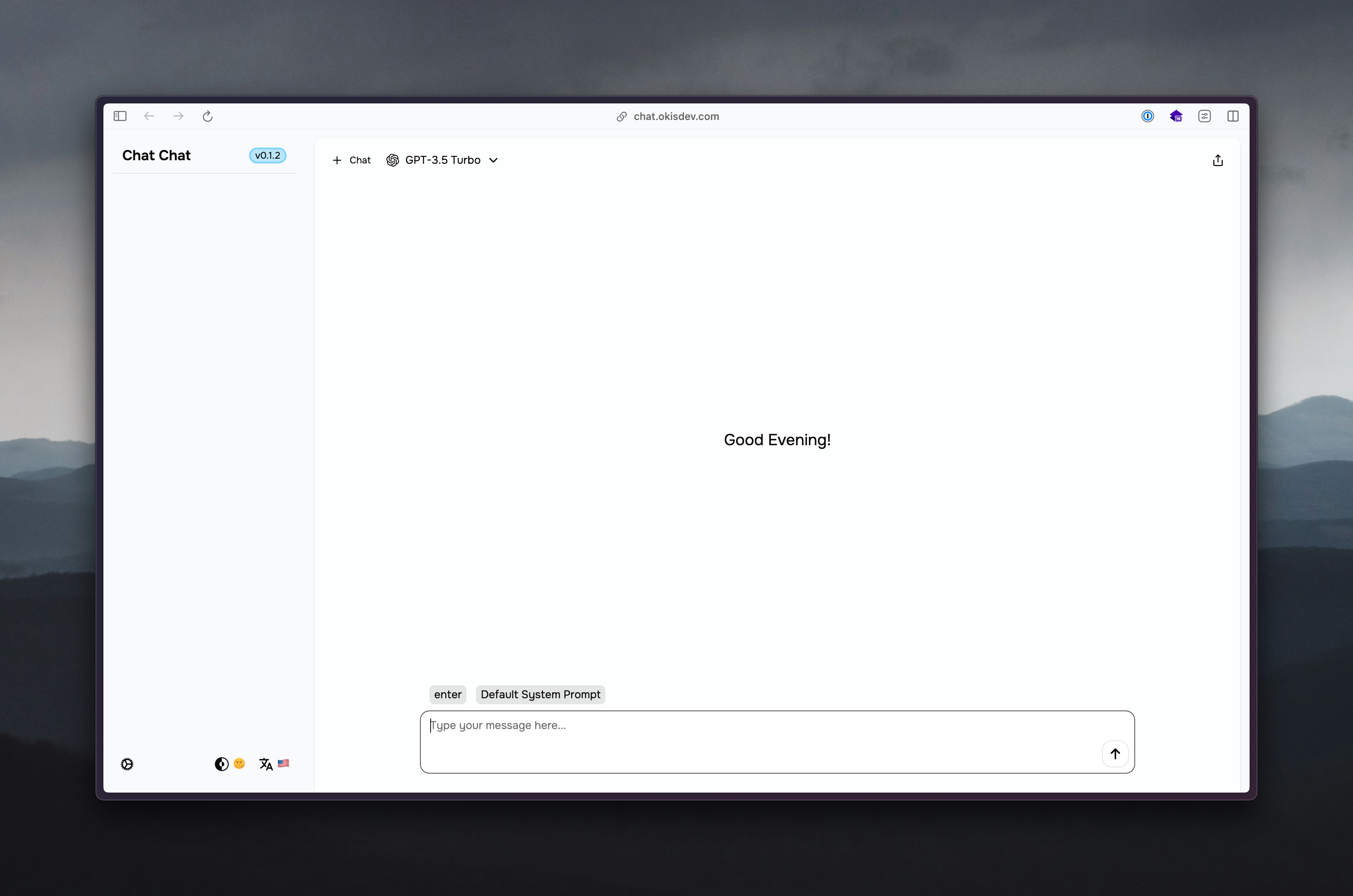

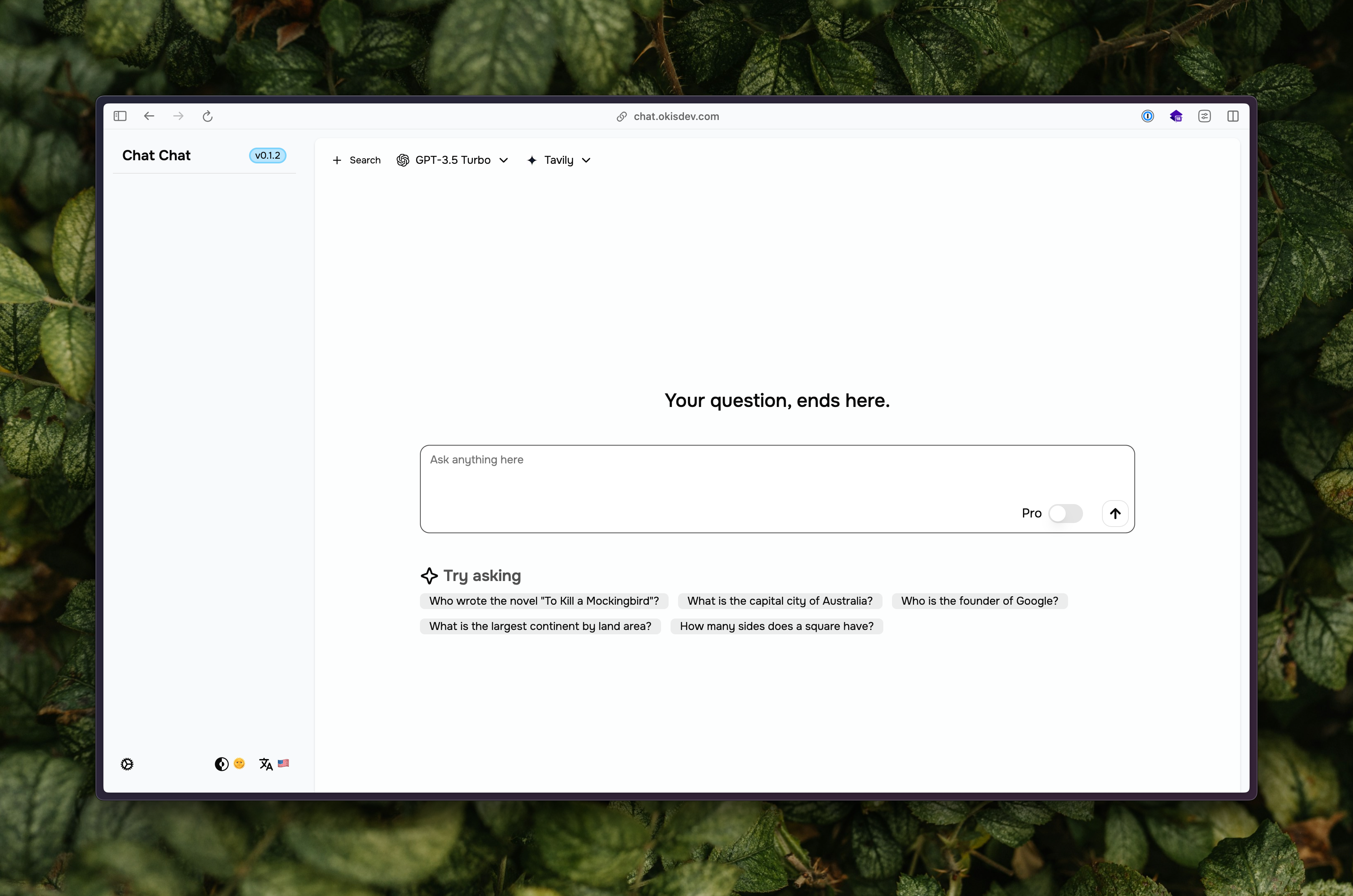

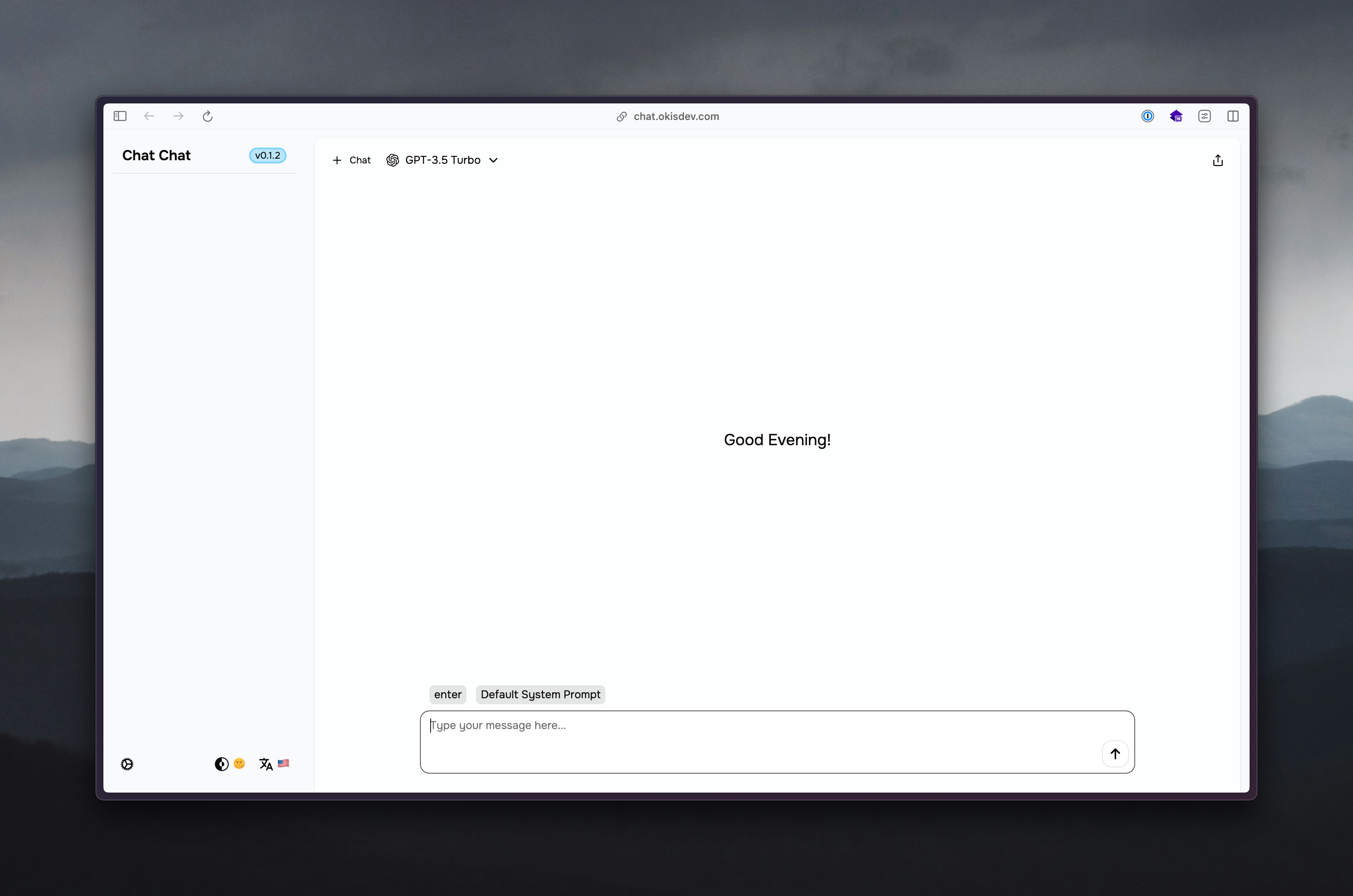

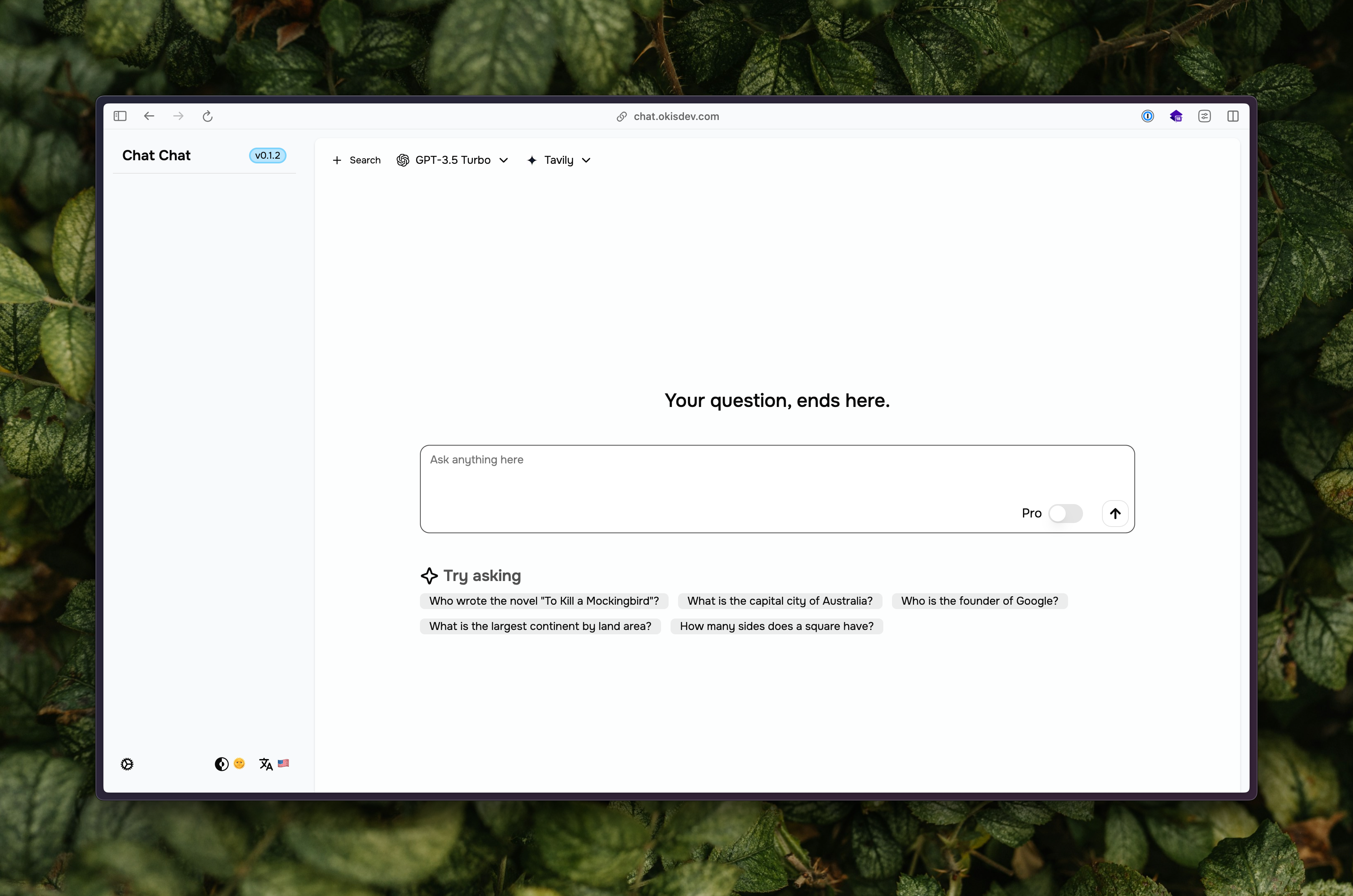

+
+## インターフェイス
+
+
+
+
+
+## 機能
+
+- 主要なAIプロバイダーに対応(Anthropic、OpenAI、Cohere、Google Geminiなど)
+- 自己ホストが容易
+
+## 使用方法
+
+[ドキュメント](https://docs.okis.dev/chat)
+
+## デプロイメント
+
+[](https://vercel.com/import/project?template=https://github.com/okisdev/ChatChat)
+
+[](https://railway.app/template/-WWW5r)
+
+詳細なデプロイ方法は[ドキュメント](https://docs.okis.dev/chat)にて
+
+## ライセンス
+
+[AGPL-3.0](./LICENSE)
+
+## 技術スタック
+
+nextjs / tailwindcss / shadcn UI
+
+## 注意
+
+- AIは不適切なコンテンツを生成する可能性がありますので、注意してご使用ください。
diff --git a/README.md b/README.md
index 96ab943d..80172dcd 100644
--- a/README.md
+++ b/README.md
@@ -1,128 +1,48 @@
-# [Chat Chat](https://chat.okisdev.com)
+# Chat Chat
-> Chat Chat to unlock your next level AI conversational experience. You can use multiple APIs from OpenAI, Microsoft Azure, Claude, Cohere, Hugging Face, and more to make your AI conversation experience even richer.
-
-[](https://github.com/okisdev/ChatChat/blob/master/LICENSE) [](https://twitter.com/okisdev) [](https://t.me/+uWx9qtafv-BiNGVk)
+> Your own unified chat and search to AI platform, with a simple and easy to use interface.
- English | 繁体中文 | 简体中文 | 日本語
+ 🇺🇸 | 🇭🇰 | 🇨🇳 | 🇯🇵

Documentation - | Common Issue

-## Important Notes
-
-- Some APIs are paid APIs, please make sure you have read and agreed to the relevant terms of service before use.
-- Some features are still under development, please submit PR or Issue.
-- The demo is for demonstration purposes only, it may retain some user data.
-- AI may generate offensive content, please use it with caution.
-
-## Preview
-
-### Interface
-
-
-
-
+## Interface
-### Functions
+
-https://user-images.githubusercontent.com/66008528/235539101-562afbc8-cb62-41cc-84d9-1ea8ed83d435.mp4
-
-https://user-images.githubusercontent.com/66008528/235539163-35f7ee91-e357-453a-ae8b-998018e003a7.mp4
+
 ## Features
-- [x] TTS
-- [x] Dark Mode
-- [x] Chat with files
-- [x] Markdown formatting
-- [x] Multi-language support
-- [x] Support for System Prompt
-- [x] Shortcut menu (command + k)
-- [x] Wrapped API (no more proxies)
-- [x] Support for sharing conversations
-- [x] Chat history (local and cloud sync)
-- [x] Support for streaming messages (SSE)
-- [x] Plugin support (`/search`, `/fetch`)
-- [x] Support for message code syntax highlighting
-- [x] Support for OpenAI, Microsoft Azure, Claude, Cohere, Hugging Face
-
-## Roadmap
-
-Please refer to https://github.com/users/okisdev/projects/7
+- Support major AI Providers (Anthropic, OpenAI, Cohere, Google Gemini, etc.)
+- Ease self-hosted
 ## Usage
-### Prerequisites
-
-- Any API key from OpenAI, Microsoft Azure, Claude, Cohere, Hugging Face
-
-### Environment variables
-
-| variable name | description | default | mandatory | tips |
-| ----------------- | --------------------------- | ------- | --------- | ----------------------------------------------------------------------------------------------------------------- |
-| `DATABASE_URL` | Postgresql database address | | **Yes** | Start with `postgresql://` (if not required, please fill in `postgresql://user:password@example.com:port/dbname`) |
-| `NEXTAUTH_URL` | Your website URL | | **Yes** | (with prefix) |
-| `NEXTAUTH_SECRET` | NextAuth Secret | | **Yes** | Random hash (16 bits is best) |
-| `EMAIL_HOST` | SMTP Host | | No | |
-| `EMAIL_PORT` | SMTP Port | 587 | No | |
-| `EMAIL_USERNAME` | SMTP username | | No | |
-| `EMAIL_PASSWORD` | SMTP password | | No | |
-| `EMAIL_FROM` | SMTP sending address | | No | |
-
-### Deployment
+[docs](https://docs.okis.dev/chat)
-> Please modify the environment variables before deployment, more details can be found in the [documentation](https://docs.okis.dev/chat/deployment/).
-
-#### Local Deployment
-
-```bash
-git clone https://github.com/okisdev/ChatChat.git
-cd ChatChat
-cp .env.example .env
-pnpm i
-pnpm dev
-```
-
-#### Vercel
+## Deployment
 [](https://vercel.com/import/project?template=https://github.com/okisdev/ChatChat)
-#### Zeabur
-
-Visit [Zeabur](https://zeabur.com) to deploy
-
-#### Railway
-
 [](https://railway.app/template/-WWW5r)
-#### Docker
-
-```bash
-docker build -t chatchat .
-docker run -p 3000:3000 chatchat -e DATABASE_URL="" -e NEXTAUTH_URL="" -e NEXTAUTH_SECRET="" -e EMAIL_HOST="" -e EMAIL_PORT="" -e EMAIL_USERNAME="" -e EMAIL_PASSWORD="" -e EMAIL_FROM=""
-```
-
-OR
-
-```bash
-docker run -p 3000:3000 -e DATABASE_URL="" -e NEXTAUTH_URL="" -e NEXTAUTH_SECRET="" -e EMAIL_HOST="" -e EMAIL_PORT="" -e EMAIL_USERNAME="" -e EMAIL_PASSWORD="" -e EMAIL_FROM="" ghcr.io/okisdev/chatchat:latest
-```
+more deployment methods in [docs](https://docs.okis.dev/chat)
 ## LICENSE
 [AGPL-3.0](./LICENSE)
-## Support me
+## Stack
-[](https://www.buymeacoffee.com/okisdev)
+nextjs / tailwindcss / shadcn UI
-## Technology Stack
+## Note
-nextjs / tailwindcss / shadcn UI
+- AI may generate inappropriate content, please use it with caution.
diff --git a/README.zh_CN.md b/README.zh_CN.md
new file mode 100644
index 00000000..51567e11
--- /dev/null
+++ b/README.zh_CN.md
@@ -0,0 +1,48 @@
+# Chat Chat
+
+> 您自己的统一聊天和搜索至AI平台,界面简单易用。
+
+
+
+
+
+ 文档
+
+

+
+## 界面
+
+
+
+
+
+## 特点
+
+- 支持主要的AI提供商(Anthropic、OpenAI、Cohere、Google Gemini等)
+- 方便自托管
+
+## 使用方式
+
+[文档](https://docs.okis.dev/chat)
+
+## 部署
+
+[](https://vercel.com/import/project?template=https://github.com/okisdev/ChatChat)
+
+[](https://railway.app/template/-WWW5r)
+
+更多部署方法见[文档](https://docs.okis.dev/chat)
+
+## 许可证
+
+[AGPL-3.0](./LICENSE)
+
+## 技术栈
+
+nextjs / tailwindcss / shadcn UI
+
+## 注意事项
+
+- AI可能会生成不适当的内容,请谨慎使用。
diff --git a/README.zh_HK.md b/README.zh_HK.md
new file mode 100644
index 00000000..92bae77a
--- /dev/null
+++ b/README.zh_HK.md
@@ -0,0 +1,48 @@
+# Chat Chat
+
+> 你的一體化聊天及搜索人工智能平台,界面簡單易用。
+
+
+
+
+
+ 文件
+
+

+
+## 介面
+
+
+
+
+
+## 功能
+
+- 支援主要人工智能供應商(Anthropic、OpenAI、Cohere、Google Gemini 等)
+- 方便自行託管
+
+## 使用方式
+
+[文件](https://docs.okis.dev/chat)
+
+## 部署
+
+[](https://vercel.com/import/project?template=https://github.com/okisdev/ChatChat)
+
+[](https://railway.app/template/-WWW5r)
+
+更多部署方法在[文件](https://docs.okis.dev/chat)
+
+## 許可證
+
+[AGPL-3.0](./LICENSE)
+
+## 技術棧
+
+nextjs / tailwindcss / shadcn UI
+
+## 注意事項
+
+- 人工智能可能會生成不當內容,請小心使用。
diff --git a/app/[locale]/(auth)/layout.tsx b/app/[locale]/(auth)/layout.tsx
deleted file mode 100644
index 7b92e5e6..00000000
--- a/app/[locale]/(auth)/layout.tsx
+++ /dev/null
@@ -1,29 +0,0 @@
-import Image from 'next/image';
-
-import { redirect } from 'next/navigation';
-
-import { getCurrentUser } from '@/lib/auth/session';
-
-import { siteConfig } from '@/config/site.config';
-
-export default async function AuthLayout({ children }: { children: React.ReactNode }) {
-    const user = await getCurrentUser();
-
-    if (user) {
-        redirect('/dashboard/profile');
-    }
-
-    return (
-{siteConfig.title}

-No conversation records found

- )} -No teams found

} -{t(whatTimeOfDay())}

+404 Not Found

-The source you requested is unavailable.

- -... not found ...

{t('Sign In')}

-- {t('New User?')}{' '} - - {t('Sign up')} - -

- - ) : ( - <> -{t('Register')}

-- {t('Already have an account with us?')}{' '} - - {t('Log In')} - -

- - )} -{t('Beta Notice')}

-- {t( - 'We are continually adding new features to the dashboard which may affect your service and experience, as well as re-impacting the database architecture, and if you are a general user, we do not recommend that you log in or register, as at the moment it is possible to use almost all features without logging in' - )} -

-{siteConfig.title}

-{user?.name}

-{user?.email}

-{t(description)}

-{t('Danger Zoom')}

-- {t('If you want to permanently remove your account')}. {t('Please click the button below, please note, This could not be undone')} -

- -{record.title}

-{formatDate(record.createdAt)}

-{t('Share')}

-{formatDate(record.updatedAt)}

-{t(item.name)}

- {item.children && ( -{t(item.name)}

- {item.children && ( -{customConfig.Dashboard.side}

-{team.name}

-{team.accessCode}

-{isUser ? t('You') : serviceProvider}

-{chatTitle ?? chatTitleResponse}

- {(serviceProvider == 'OpenAI' || serviceProvider == 'Team' || serviceProvider == 'Azure') && ( -{t(CodeModeConfig.find((mode) => mode.name === codeMode)?.hint)}

-
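The environment-variable table removed from `README.md` in this diff listed three mandatory settings: `DATABASE_URL`, `NEXTAUTH_URL` and `NEXTAUTH_SECRET`. It also listed optional SMTP settings, where `EMAIL_PORT` defaults to 587. The following is a minimal sketch, in TypeScript, of a startup check for that configuration. `loadConfig`, `AppConfig` and `EmailConfig` are hypothetical names and are not part of ChatChat. The rule that `NEXTAUTH_URL` must start with `http://` or `https://` is an assumption about what the table's "(with prefix)" tip means.

```typescript
// Hypothetical startup check for the variables in the removed README table.
// loadConfig / AppConfig / EmailConfig are illustrative names, not ChatChat APIs.

type Env = Record<string, string | undefined>;

interface EmailConfig {
  host: string;
  port: number;
  username?: string;
  password?: string;
  from?: string;
}

interface AppConfig {
  databaseUrl: string;
  nextAuthUrl: string;
  nextAuthSecret: string;
  email?: EmailConfig; // undefined when no SMTP host is configured
}

// Returns a mandatory variable, throwing if it is missing or blank.
function requireVar(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing required environment variable ${name}`);
  }
  return value;
}

function loadConfig(env: Env): AppConfig {
  const databaseUrl = requireVar(env, "DATABASE_URL");
  if (!databaseUrl.startsWith("postgresql://")) {
    throw new Error("DATABASE_URL must start with postgresql://");
  }

  // Assumption: "(with prefix)" means the URL must include its scheme.
  const nextAuthUrl = requireVar(env, "NEXTAUTH_URL");
  if (!/^https?:\/\//.test(nextAuthUrl)) {
    throw new Error("NEXTAUTH_URL must include the http:// or https:// prefix");
  }

  const nextAuthSecret = requireVar(env, "NEXTAUTH_SECRET");

  // SMTP settings are optional; they are only collected when EMAIL_HOST is set.
  let email: EmailConfig | undefined;
  const host = env["EMAIL_HOST"];
  if (host !== undefined && host.trim() !== "") {
    const rawPort = env["EMAIL_PORT"];
    const port = rawPort ? Number(rawPort) : 587; // 587 is the table's default
    if (!Number.isInteger(port) || port < 1 || port > 65535) {
      throw new Error(`EMAIL_PORT is not a valid TCP port: ${rawPort}`);
    }
    email = {
      host,
      port,
      username: env["EMAIL_USERNAME"],
      password: env["EMAIL_PASSWORD"],
      from: env["EMAIL_FROM"],
    };
  }

  return { databaseUrl, nextAuthUrl, nextAuthSecret, email };
}
```

In a Node process this would typically be called once at startup as `loadConfig(process.env)`. The check then fails fast, instead of surfacing a missing secret on the first sign-in request.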