-

Notifications

You must be signed in to change notification settings - Fork 185

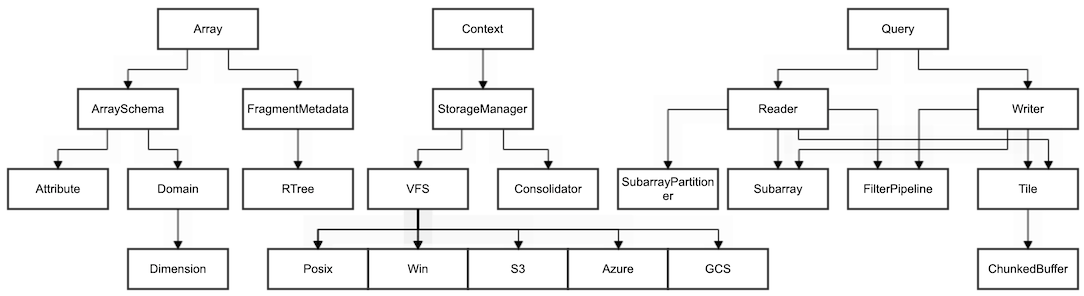

Architecture

This is a broad but non-exhaustive ownership graph of the major classes within the TileDB core.

Array: An in-memory representation of a single on-disk TileDB array.

ArraySchema: Defines an array.

Attribute: Defines a single attribut.

Domain: Defines the array domain.

Dimension: Defines a dimension within the array domain.

FragmentMetadata: An in-memory representation of a single on-disk fragment's metadata.

RTree: Contains minimum bounding rectangles (MBRs) for a single fragment.

Context: A session state.

StorageManager: Facilitates all access between the user and the on-disk files.

VFS: The virtual filesystem interface that abstracts IO from the configured backend/filesystem.

Posix: IO interface to a POSIX-compliant filesystem.

Win: IO interface to a Windows filesystem.

S3: IO interface to an S3 bucket.

Azure: IO interface to an Azure Storage Blob.

GCS: IO interface to a Google Cloud Storage Bucket.

Consolidator: Implements the consolidation operations for fragment data, fragment metadata, and array metadata.

Query: Defines and provides state for a single IO query.

Reader: IO state for a read-query.

SubarrayPartioner: Slices a single subarray into smaller subarrays.

Subarray: Defines the bounds of an IO operation within an array.

FilterPipeline: Transforms data between memory and disk during an IO operation, depending on the defined filters within the schema.

Tile: An in-memory representation of an on-disk data tile.

ChunkedBuffer: Organizes tile data into chunks.

Write: IO state for a write-query.

The lowest-level public interface into the TileDB library is through the C API. The symbols from the C++ source are not exported. All other APIs wrap the C API, including the C++ API.

Both the C and C++ APIs are included in the core repository. All other APIs reside in their own repository. Everything in the core source (tiledb/sm/*) except for the c_api and cpp_api directories are inaccessible outside of the source itself.

C API: https://github.com/TileDB-Inc/TileDB/tree/dev/tiledb/sm/c_api

C++ API: https://github.com/TileDB-Inc/TileDB/tree/dev/tiledb/sm/cpp_api

Python API: https://github.com/TileDB-Inc/TileDB-Py

R API: https://github.com/TileDB-Inc/TileDB-R

Go API: https://github.com/TileDB-Inc/TileDB-Go

TODO

TODO

The current on-disk format spec can be found here:

https://github.com/TileDB-Inc/TileDB/blob/dev/format_spec/FORMAT_SPEC.md.

The Array class provides an in-memory representation of a single TileDB array. The ArraySchema class stores the contents of the __array_schema.tdb file. The Domain, Dimension, and Attribute classes represent the sections of the array schema that they are named for. The Metadata class represents the __meta directory and nested files. The FragmentMetadata represents a single __fragment_metadata.tdb file, one per fragment. Tile data (e.g. attr.tdb and attr_var.tdb) is stored within instances of Tile.

The StorageManager class mediates all I/O between the user and the array. This includes both query I/O and array management (creating, opening, closing, and locking). Additionally, it provides state the persists between queries (such as caches and thread pools).

The tile cache is an LRU cache that stores filtered (e.g. compressed) attribute and dimension data. Is it used during a read query. The VFS maintains a read-ahead cache and backend-specific state (for example, an authenticated session to S3/Azure/GCS).

The compute and I/O thread pools are available for use anywhere in the core. The compute thread pool should be used for compute-bound tasks while the I/O thread pool should be used for I/O-bound tasks (such as reading from disk or an S3/Azure/GCS client).

The above is a high-level flow diagram depicting the path of user I/O:

- A user constructs a

Queryobject that defines the type of I/O (read or write) and the subarray to operate on. - The

Queryobject is submitted to theStorageManager. - The

StorageManagerinvokes either aReaderorWriter, depending on the I/O type. - The

FilterPipelineis unfilters data for reads and filters data for writes. - The

VFSperforms the I/O to the configured backend.

TODO

TODO

The VFS (virtual filesystem) provides a filesystem-like interface file management and IO. It abstracts one of the six currently-available "backends" (sometimes referred to as "filesystems"). The available backends are: POSIX, Windows, AWS S3, Azure Blob Storage, Google Cloud Storage, and Hadoop Distributed File System.

The read path serves two primary functions:

- Large reads are split into smaller batched reads.

- Modifies small read requests to read-ahead more bytes than requested. After the read, the excess bytes are cached in-memory. Read-ahead buffers are cached by URI in an LRU policy. Note that attribute and dimension tiles are bypassed, because that data is cached in the Storage Manager's tile cache.

If reads are serviceable through the read-ahead cache, no request will be made to the backend.

The write path directly passes the write request to the backend, deferring parallelization, write caching, and write flushing. Writes will invalidate the cached read-ahead buffers for the associated URIs.

TODO

TODO

TODO

TODO

TODO

TODO

TODO

TODO